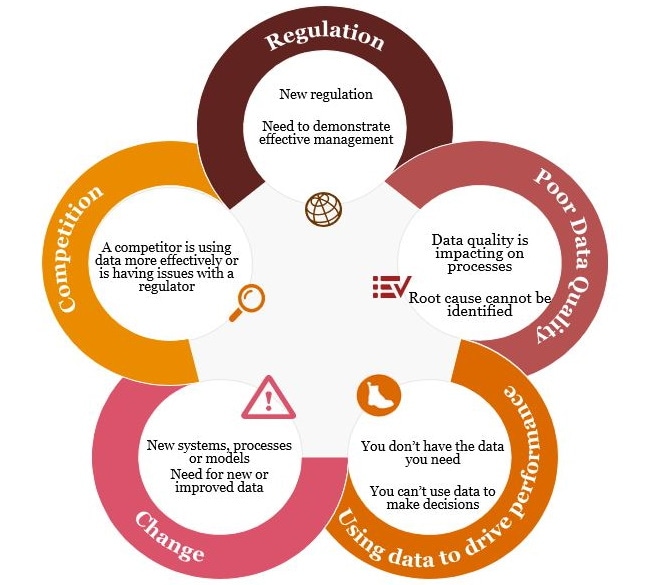

Within an organisation, adequate data quality is vital for transactional and operational processes. Data quality is affected by how data is entered, handled and maintained, and what counts as adequate depends on the context in which the data is used. There is therefore no absolute, universally valid quality benchmark.

PwC’s Enterprise Data Quality (EDQ) framework offers an inside-out perspective for gauging an organisation's key data quality solutions and designing a comprehensive data quality roadmap that fast-tracks the adoption of key quality initiatives:

- Define Data Quality Scope and Approach
- Define Data Quality Organisation
- Define Data Quality Process
- Develop Data Quality Technical Infrastructure
- Provide Training
- Roll out Data Quality Programme

The bank collects customer information from various sources, such as core banking, credit cards, loans, demat accounts and third-party mutual funds (MF).

The primary concern was therefore to achieve a single customer view in the existing data warehouse (DW) through de-duplication and clustering. Householding, standardisation and some data augmentation were also part of the solution.
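To make these terms concrete, here is a minimal sketch of standardisation, de-duplication into a single customer view, and householding. It is written in Python purely for illustration and is not the SAS-based solution used in the engagement. The field names, the matching rule (same standardised name and date of birth) and the sample records are all hypothetical assumptions; a real solution would typically use fuzzier matching and richer rules.

```python
import re
from collections import defaultdict


def standardise(value: str) -> str:
    """Lower-case, replace punctuation with spaces and collapse whitespace."""
    cleaned = re.sub(r"[^a-z0-9 ]", " ", value.lower())
    return " ".join(cleaned.split())


def match_key(record: dict) -> tuple:
    # Hypothetical matching rule: the same standardised name and date of
    # birth are assumed to identify the same customer.
    return (standardise(record["name"]), record["dob"])


def single_customer_view(records: list) -> dict:
    """Cluster records from all source systems by their match key."""
    clusters = defaultdict(list)
    for record in records:
        clusters[match_key(record)].append(record)
    return dict(clusters)


def households(records: list) -> dict:
    """Group distinct customers that share a standardised address."""
    groups = defaultdict(set)
    for record in records:
        groups[standardise(record["address"])].add(match_key(record))
    return dict(groups)


# Made-up sample records; source names mirror the systems listed above.
records = [
    {"source": "core_banking", "name": "Asha  Rao", "dob": "1980-04-12",
     "address": "12, MG Road"},
    {"source": "credit_cards", "name": "ASHA RAO.", "dob": "1980-04-12",
     "address": "12 MG Road"},
    {"source": "loans", "name": "Vikram Rao", "dob": "1978-09-30",
     "address": "12 MG ROAD"},
]

customers = single_customer_view(records)
homes = households(records)
print(len(customers), len(homes))  # prints "2 1"
```

In this sketch the two differently formatted records for the same person collapse into one customer, and both customers fall into one household because their standardised addresses match. Exact-key matching is the simplest possible choice; it keeps the example short but would miss spelling variants that a production matching engine is expected to catch.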

The PwC team introduced a data quality solution built on SAS technology, through which customer information must pass before it is stored in the data warehouse. The approach included:

The organisation wishes to review its customer accessing and targeting processes and systems across its business lines (AMC, Insurance and Distribution), with the goal of increasing revenue and enabling operational efficiencies, especially in front-line processes, namely sales, services and marketing.

PwC was engaged to define functional requirements, analyse gaps with respect to the current application systems, design the technical and operational architecture, and recommend tools and solutions (BI, ETL, MDM, CRM and Portal) for phased implementation.
